Depth sensing is fundamental to how we perceive and interact with the three-dimensional world, and it is crucial in fields such as autonomous driving, drone control, and augmented reality. However, capturing detailed depth information efficiently and accurately has long been a challenge. Active depth sensing technologies such as LiDAR and time-of-flight (ToF) cameras are limited by high power consumption, low resolution, and high cost, while multi-view stereo vision systems suffer from system complexity and occlusion.

In comparison, monocular depth sensing techniques estimate depth directly from a single image without active light sources. They combine relative depth cues in the image, such as perspective and occlusion, with absolute depth cues provided by the depth-dependent characteristics of the camera's point spread function (PSF). This approach offers higher efficiency and a more compact system design.

However, the defocus PSF of a traditional 2D camera is symmetrical around the focal plane: an object in front of the focal plane and one behind it can produce the same blur, so absolute depth cannot be obtained unambiguously. Light field cameras avoid this ambiguity by converting defocus into disparity between sub-aperture images, but their lower spatial resolution significantly reduces the scene detail they can capture. Moreover, both 2D and light field cameras are susceptible to optical aberrations when capturing depth information, which further limits the application of monocular depth sensing in real-world scenarios. Is it possible to develop a new type of monocular camera that achieves high-precision and robust depth sensing?
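The ambiguity described above can be illustrated with a textbook thin-lens model. The following is a minimal sketch, not the team's method: the 50 mm focal length, 25 mm aperture, and 10 mm sub-aperture offset are assumed values chosen for illustration, and only the 2.48 m focus distance comes from the experiment reported later in this article. It shows that two depths on opposite sides of the focal plane give the same blur diameter, while the shift seen through an off-axis sub-aperture changes sign.

```python
def image_distance(f, s):
    """Thin-lens image distance for an object at distance s (all in meters)."""
    return f * s / (s - f)


def blur_diameter(f, aperture, focus_dist, s):
    """Unsigned defocus blur diameter on a sensor focused at focus_dist."""
    v_sensor = image_distance(f, focus_dist)
    v = image_distance(f, s)
    return aperture * abs(v - v_sensor) / v


def subaperture_shift(f, u, focus_dist, s):
    """Signed image shift seen through a sub-aperture offset u from the axis."""
    v_sensor = image_distance(f, focus_dist)
    v = image_distance(f, s)
    return u * (v - v_sensor) / v


def far_twin(focus_dist, s_near):
    """Depth behind the focal plane with the same blur diameter as s_near.

    Follows from blur being proportional to |1/s - 1/focus_dist|;
    requires s_near > focus_dist / 2.
    """
    return 1.0 / (2.0 / focus_dist - 1.0 / s_near)


if __name__ == "__main__":
    f, aperture, u = 0.05, 0.025, 0.01  # assumed lens parameters (meters)
    focus = 2.48                        # focus distance quoted in the article
    near = 1.8
    far = far_twin(focus, near)
    for s in (near, far):
        print(f"depth {s:.3f} m: "
              f"blur {blur_diameter(f, aperture, focus, s) * 1e6:.1f} um, "
              f"shift {subaperture_shift(f, u, focus, s) * 1e6:+.1f} um")
```

The unsigned blur alone cannot tell the two depths apart, whereas the sign of the sub-aperture shift can, which is the sense in which disparity removes the ambiguity.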

Recently, a research team led by Academician Dai Qionghai and Associate Professor Qiao Hui from the Department of Automation at Tsinghua University proposed an integrated meta-imaging camera based on their previously developed meta-imaging sensor, and used it to achieve aberration-robust, high-precision monocular depth sensing. Through simulations and experiments, the team demonstrated that the meta-imaging camera makes monocular depth sensing more reliable in a variety of complex scenarios, greatly expanding its application prospects in fields such as autonomous driving and virtual reality.

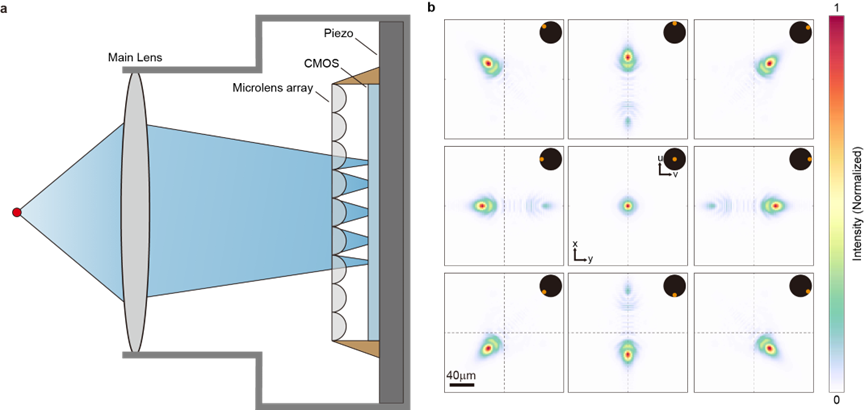

Figure 1. Construction of the meta-imaging camera.

a. The meta-imaging camera integrates a main lens, a microlens array, a CMOS sensor, and a piezoelectric stage. b. Images from different sub-apertures in the 4D PSF of the meta-imaging camera.

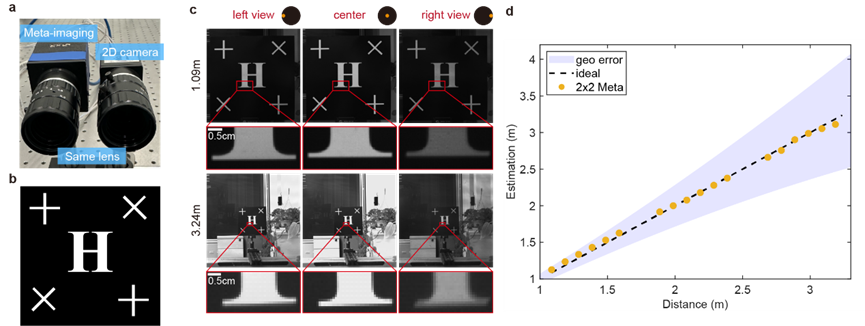

The research team constructed a meta-imaging camera integrating a main lens, a microlens array, a CMOS sensor, and a piezoelectric stage. Through a scanning mechanism, this camera overcomes the inherent trade-off between spatial resolution and angular resolution, raising the spatial resolution of each sub-aperture image. With this improved spatial resolution, depth information is carried not only by the disparity between sub-apertures but also by the changes in the PSF pattern within each sub-aperture. Furthermore, the high-resolution sub-aperture images enable the meta-imaging camera to perform multi-site aberration correction through digital adaptive optics (DAO). Consequently, it can capture depth information efficiently even in the presence of aberrations, ensuring accurate and robust depth perception. In depth estimation experiments with a letter board, the meta-imaging camera achieved an average absolute error of 0.03 meters and a relative error of 1.7% within a test range of 1 to 3.5 meters.

Figure 2. Prototype and depth estimation experiments.

a. The meta-imaging camera and a 2D camera with identical lens and sensor parameters. b. Letter board marked with "H". c. Images of the letter board captured by the meta-imaging camera at different distances, with focus at 2.48 meters. d. Estimated depth of the board. "geo error" refers to the theoretical depth estimation accuracy based solely on geometric optics (disparity).
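The two accuracy figures quoted for the letter-board experiment can be computed as in the sketch below. The article does not define "relative error", so the code assumes it means the mean of the absolute error divided by the true distance. The function names are hypothetical, and the sample values are made-up placeholders, not the team's measurements.

```python
def mean_absolute_error(estimated, truth):
    """Mean of |estimate - truth|, in the same unit as the inputs."""
    if len(estimated) != len(truth) or not truth:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(e - t) for e, t in zip(estimated, truth)) / len(truth)


def mean_relative_error(estimated, truth):
    """Mean of |estimate - truth| / truth (assumed definition), as a fraction."""
    if len(estimated) != len(truth) or not truth:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(e - t) / t for e, t in zip(estimated, truth)) / len(truth)


if __name__ == "__main__":
    # Placeholder distances in meters, for illustration only.
    truth = [1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
    estimated = [1.02, 1.47, 2.03, 2.54, 2.97, 3.55]
    print(f"absolute error: {mean_absolute_error(estimated, truth):.3f} m")
    print(f"relative error: {100 * mean_relative_error(estimated, truth):.2f} %")
```

Reporting both numbers is useful because a fixed absolute error weighs more heavily at the near end of the range than at the far end.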

In addition to monocular depth sensing, the meta-imaging camera can also replace the 2D cameras in current stereo vision systems, improving their depth sensing performance. Moreover, passive depth sensing methods, both monocular and multi-view, lose accuracy and robustness at long distances because of dynamic optical aberrations caused by atmospheric turbulence. The meta-imaging camera, with its strong robustness against aberrations, compensates for this limitation and could further extend the effectiveness of passive depth sensing to long-distance scenarios.