Continuing advances in mesoscale in vivo microscopy and related imaging technologies have enabled large-field, high-resolution 3D imaging within living tissues, capturing long-term dynamics across entire organs. These datasets, often spanning from hundreds of micrometers to centimeter scales and involving thousands or even tens of thousands of cells over several hours or longer, can reach the terabyte or even petabyte scale. They provide unprecedented opportunities to investigate complex biological processes such as immune responses, tissue repair, and neural activity. However, they also present substantial challenges. Efficiently extracting biologically meaningful information from such massive 3D spatiotemporal datasets, and tracking the morphologies and trajectories of large numbers of cells rapidly, accurately, and stably, remain key bottlenecks that limit the application of high-throughput mesoscale in vivo imaging.

Three-dimensional cell tracking faces three major challenges: stable cell detection, reliable inter-frame linking, and computational efficiency. In conventional detect-and-track approaches, the model must first localize each cell in every frame and then link cells between frames. For long-term continuous tracking, both detection and linking must remain highly accurate across tens of thousands of frames. However, high-quality 3D annotations are difficult to produce because of spatial non-uniformity, low axial resolution, and variable imaging parameters, which together limit model generalization. Moreover, cells in 3D imaging often divide, collide, and deform non-rigidly between adjacent frames, which makes consistent feature correspondence difficult, so matching based solely on fixed-size windows or a single feature descriptor cannot ensure stable linking. Global optimization strategies can reduce the dependence on local annotations, but they must process entire sequences, leading to heavy computational cost and processing times of days or even weeks for a single dataset. Developing a general method that minimizes annotation requirements while maintaining high linking accuracy has therefore become a central challenge for large-scale 3D imaging.

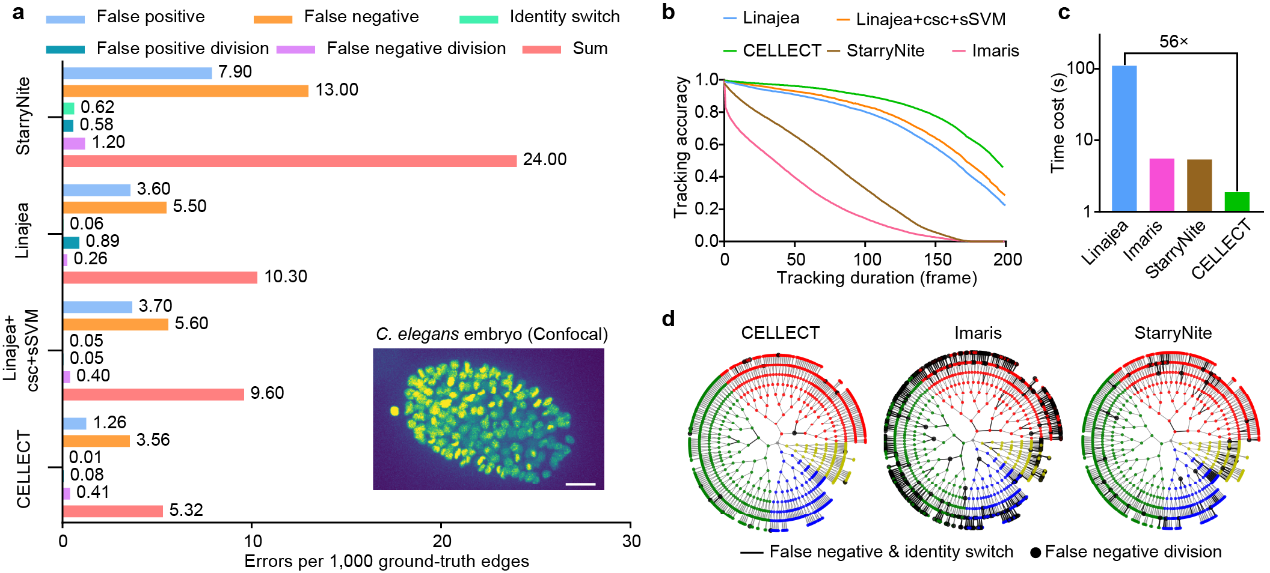

To address these issues, the team led by Qionghai Dai and Jiamin Wu at Tsinghua University proposed CELLECT (Contrastive Embedding Learning for Large-scale Efficient Cell Tracking), a new framework for 3D cell tracking. CELLECT is the first method to bring contrastive learning into 3D cell tracking: it operates directly on cell centers and learns a representation for each individual centroid. Its multi-level center detection module simultaneously predicts cell locations, segmentation regions, size information, and embedding vectors, so that image-based detection and cell-based contrastive learning are optimized jointly within a single framework. As a result, variations in cell morphology and size in 3D space no longer limit detection or linking performance. The model extracts all cell centers and their embeddings in a single inference pass, and cells in adjacent frames are linked by comparing the distances between their embedding vectors. Training requires only sparse center annotations: in the latent embedding space, the model learns to minimize the distance between embeddings of the same cell across frames while maximizing the distance between embeddings of different cells. This enables efficient and robust trajectory linking with minimal annotation. The center-driven strategy substantially reduces the difficulty of 3D labeling, mitigates the loss of generalization caused by differences in imaging parameters, and remains stable under blurred boundaries and morphological variability. CELLECT shows strong robustness and generalization across datasets and imaging modalities. It processes data 56 times faster than Linajea, one of the most accurate existing tracking algorithms, and ranks first in both the tracking and segmentation tracks of the Cell Tracking Challenge on the developing C. elegans embryo datasets (team name: THU).

Figure 1. Comparative evaluation of CELLECT. a, Error ratio. b, Tracking accuracy. c, Processing speed. d, Lineage tracking performance.
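The two ingredients described above, a contrastive objective on per-cell embeddings and linking of adjacent frames by embedding distance, can be illustrated with a small NumPy sketch. It is not CELLECT's code: the margin-based contrastive loss, the mutual-nearest-neighbour linking rule, and the names `contrastive_loss`, `link_frames`, `margin` and `max_dist` are hypothetical stand-ins chosen for illustration, and the toy 2D embeddings replace learned high-dimensional ones.

```python
import numpy as np


def pairwise_distances(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Euclidean distance between every row of a (N, D) and every row of b (M, D)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def contrastive_loss(emb_t, emb_t1, ids_t, ids_t1, margin=1.0):
    """Hypothetical margin-based contrastive loss on centroid embeddings.

    Embeddings of the same cell (same ID) in frames t and t+1 are pulled
    together; embeddings of different cells are pushed at least `margin` apart.
    """
    d = pairwise_distances(emb_t, emb_t1)
    same = ids_t[:, None] == ids_t1[None, :]
    pos = d[same] ** 2                                # same cell: penalise any distance
    neg = np.maximum(0.0, margin - d[~same]) ** 2     # different cells: penalise closeness
    pos_term = pos.mean() if pos.size else 0.0
    neg_term = neg.mean() if neg.size else 0.0
    return float(pos_term + neg_term)


def link_frames(emb_t, emb_t1, max_dist=0.5):
    """Hypothetical linking rule: mutual nearest neighbours in embedding space.

    Returns (i, j) pairs meaning detection i in frame t continues as detection j
    in frame t+1. Detections without a mutual match within `max_dist` are left
    unlinked (e.g. cells entering the field of view).
    """
    d = pairwise_distances(emb_t, emb_t1)
    best_next = d.argmin(axis=1)   # closest frame-(t+1) detection for each frame-t cell
    best_prev = d.argmin(axis=0)   # closest frame-t cell for each frame-(t+1) detection
    links = []
    for i, j in enumerate(best_next):
        if best_prev[j] == i and d[i, j] <= max_dist:
            links.append((i, int(j)))
    return links


# Toy example: three cells with 2D embeddings; in frame t+1 they are slightly
# perturbed and listed in a different order.
emb_t = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
emb_t1 = np.array([[0.05, 0.98], [0.02, -0.03], [0.97, 0.04]])
ids_t = np.array([0, 1, 2])
ids_t1 = np.array([2, 0, 1])  # ground-truth identities of the frame-(t+1) detections

print(link_frames(emb_t, emb_t1))
print(contrastive_loss(emb_t, emb_t1, ids_t, ids_t1))
```

Mutual nearest-neighbour matching is about the simplest rule that turns embedding distances into one-to-one links. A practical tracker would more likely use an optimal assignment (for example the Hungarian algorithm) and would need explicit handling of divisions, where one cell in frame t should link to two daughters in frame t+1.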

After validating its baseline performance, the research team examined whether CELLECT generalizes across imaging modalities and tissues. Because annotations suitable for training are difficult to obtain in most in vivo experiments, all subsequent tests applied the model pretrained on the mskcc-confocal dataset directly, without any additional annotation or retraining. The results show that CELLECT maintains stable performance across entirely different imaging modalities, tissue structures, and biological contexts. Because it transfers without retraining, researchers can apply CELLECT directly to large-scale 3D datasets, which significantly lowers the technical barrier to automated tracking and lays a solid foundation for its broad application in the life sciences.

Figure 2. Representative segmentation and center-detection results of CELLECT on an image dataset acquired with scanning light-field microscopy. Left: raw image. Middle: segmentation confidence map. Right: center-detection confidence map.

Having validated CELLECT's performance and cross-modality generalization, the team applied it to challenging experimental datasets to evaluate its usefulness for representative biological problems. Three large-scale 3D imaging scenarios were selected: germinal center formation by B cells in mouse lymph nodes, interactions between immune cells and bacteria in the mouse spleen, and neuronal activity in the Drosophila brain under tissue deformation. These datasets represent typical applications in immunology and neuroimaging, and each poses distinct experimental challenges, providing a comprehensive test of CELLECT's robustness and versatility under complex biological conditions.

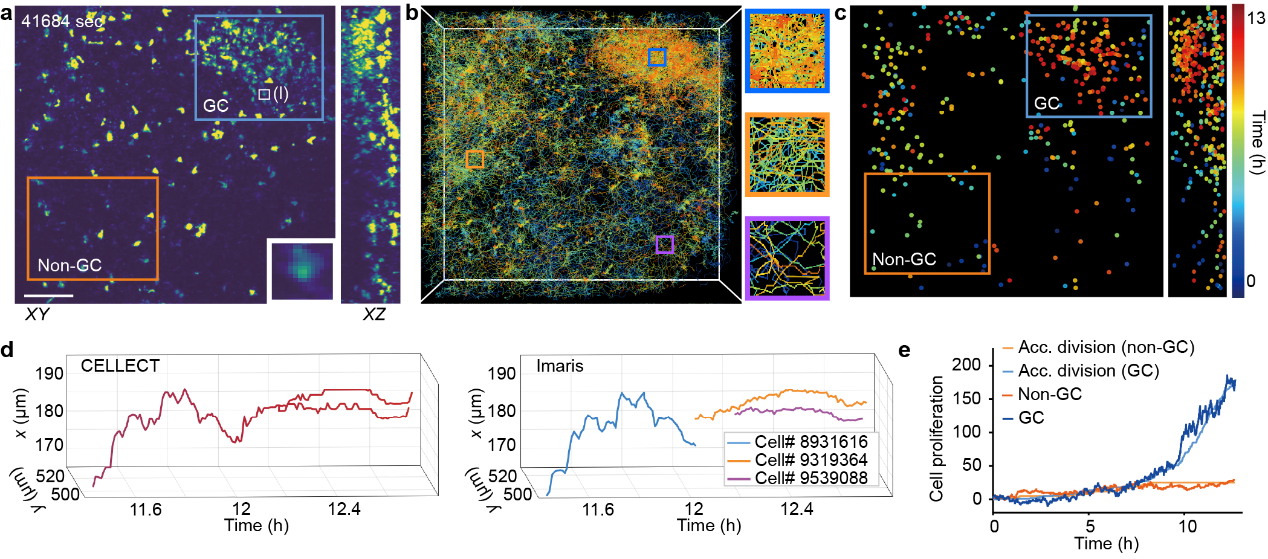

For the immunological experiments, CELLECT was applied to long-term 3D imaging of mouse lymph nodes to analyze germinal center formation. Germinal centers are key sites of B cell proliferation, differentiation, and selection during immune responses; they contain densely packed, highly motile cells that make tracking challenging. The researchers acquired a 1,352-frame dataset of approximately 260 GB using two-photon synthetic aperture microscopy. Under such conditions, conventional methods often produce fragmented or incorrect tracks because of the dense cell populations and frequent divisions. Without additional annotation, CELLECT reconstructed continuous trajectories of cells within the germinal center and accurately identified cell division events. Unlike traditional software, which frequently produces segmentation errors during division, CELLECT maintained the continuity and integrity of trajectories. This capability lowers the barrier to data analysis in immunological studies and enables systematic quantification of germinal center dynamics, providing a powerful tool for understanding adaptive immune responses.

Figure 3. Automated tracking of germinal center formation and cell division events in the mouse lymph node during an immune response.

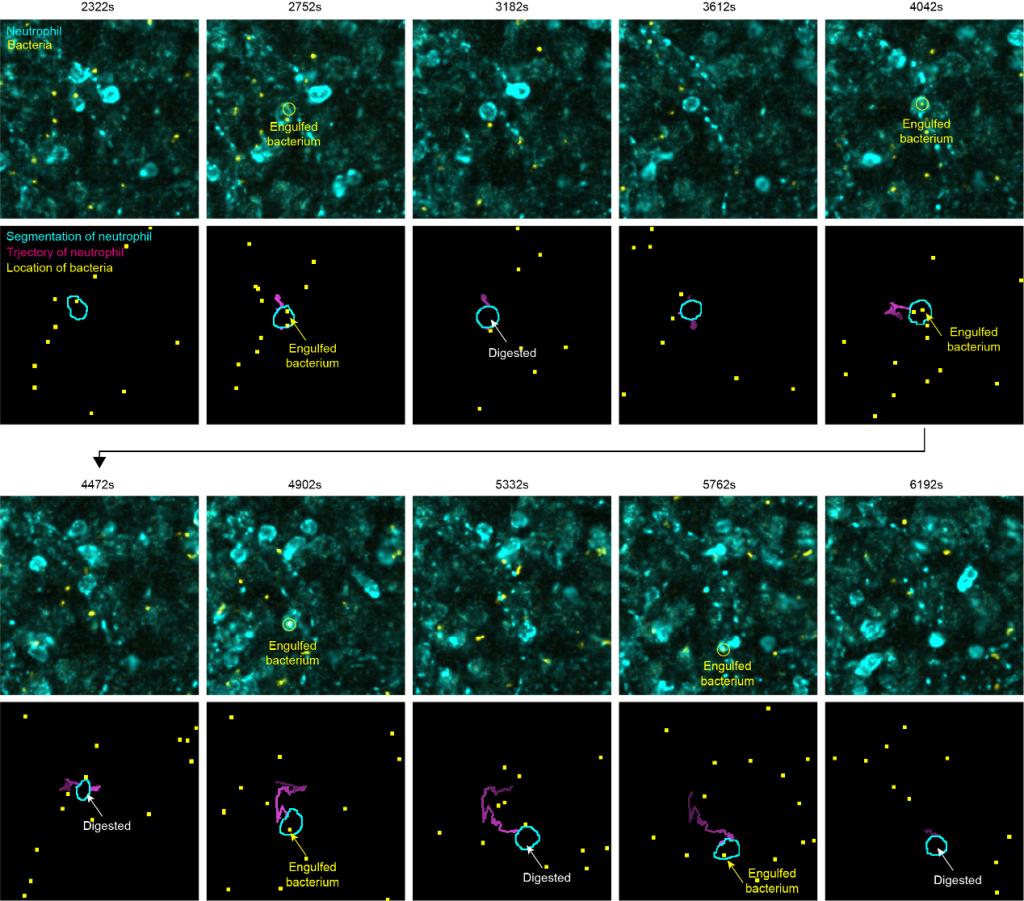

In the multichannel 3D imaging data of mouse spleen, the experimental subjects included membrane-labeled neutrophils and macrophages, together with bacterial signals. Membrane labeling often produces cells with indistinct boundaries and irregular morphologies, posing additional challenges for detection and tracking. Without any parameter adjustment, CELLECT reliably detected and tracked multiple object types simultaneously and automatically identified key events in long-term image sequences. The model maintained trajectory continuity while clearly labeling interactions between different objects, demonstrating strong robustness and adaptability in complex multichannel imaging scenarios.

Figure 4. Representative result of automated segmentation and tracking of a neutrophil engulfing bacteria.

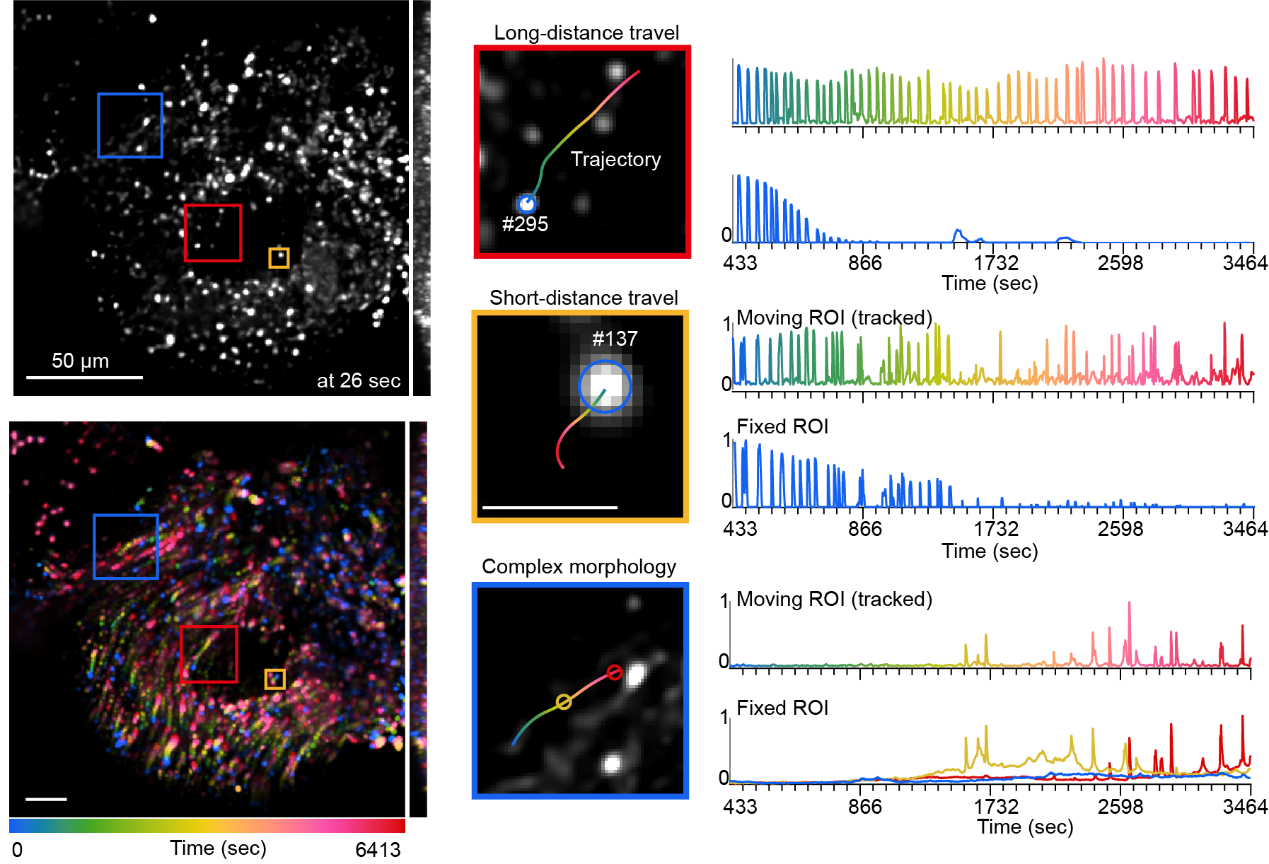

In long-term 3D imaging of the Drosophila brain, external interventions such as cranial surgery caused tissue collapse and ongoing non-rigid deformation during imaging, so neurons shifted position continuously and their trajectories became harder to maintain. Moreover, neuronal fluorescence in functional imaging was intermittent: neurons were visible only at high-intensity moments and nearly invisible during low-signal periods, which further complicated detection and tracking. Across 14,800 frames (approximately 6,400 seconds), CELLECT maintained complete trajectories for hundreds of neurons (restricted to neurons visible in the first frame) and accurately extracted their calcium signals throughout the sequence. CELLECT achieved several-fold higher tracking accuracy than conventional software, even when the conventional results were refined with 2,000-frame reconnection optimization, and preserved both trajectory continuity and signal stability despite severe tissue deformation and fluorescence fluctuations, demonstrating its robustness and practical utility in complex neural environments.

Figure 5. Tracking performance under Drosophila brain tissue deformation. Upper left: raw image; lower left: temporal-color coded projection image; right: representative traces of transient calcium activity of neurons with and without tracking.
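Figure 5 contrasts calcium traces extracted with and without tracking. The following minimal sketch illustrates why this matters, assuming a (T, Z, Y, X) volume sequence and one integer centroid per frame for a single neuron: sampling at the tracked centroid follows the drifting cell, whereas sampling at its first-frame position loses it. The helper names `sample_trace` and `delta_f_over_f`, the cube radius, and the percentile baseline for ΔF/F are illustrative assumptions, not the paper's signal-extraction pipeline.

```python
import numpy as np


def sample_trace(movie, centers, radius=1):
    """Mean intensity in a (2r+1)^3 cube around one centroid per frame.

    movie: array of shape (T, Z, Y, X); centers: integer array of shape (T, 3)
    holding (z, y, x) per frame. The lower bounds are clipped explicitly;
    NumPy slicing already clips at the upper volume border.
    """
    trace = np.empty(movie.shape[0])
    for t, (z, y, x) in enumerate(centers):
        cube = movie[t,
                     max(z - radius, 0):z + radius + 1,
                     max(y - radius, 0):y + radius + 1,
                     max(x - radius, 0):x + radius + 1]
        trace[t] = cube.mean()
    return trace


def delta_f_over_f(trace, baseline_percentile=20.0):
    """(F - F0) / F0, with the baseline F0 taken as a low percentile of the trace."""
    f0 = np.percentile(trace, baseline_percentile)
    return (trace - f0) / f0


# Synthetic example: one neuron (a 3x3x3 block) drifts 1 voxel per frame along x,
# mimicking tissue deformation, and fires a transient in frames 6 and 7.
T, shape = 10, (8, 16, 16)
movie = np.ones((T, *shape))                 # background intensity 1.0
brightness = np.full(T, 2.0)                 # resting fluorescence of the neuron
brightness[6:8] = 6.0                        # calcium transient
centers = np.array([(4, 8, 3 + t) for t in range(T)])
for t, (z, y, x) in enumerate(centers):
    movie[t, z - 1:z + 2, y - 1:y + 2, x - 1:x + 2] = brightness[t]

tracked = delta_f_over_f(sample_trace(movie, centers))
fixed = delta_f_over_f(sample_trace(movie, np.repeat(centers[:1], T, axis=0)))
print("tracked:", tracked.round(2))
print("fixed:  ", fixed.round(2))
```

In this toy example the tracked trace shows the transient in frames 6 and 7, while the trace sampled at the first-frame position decays to background as the neuron drifts away and misses the transient entirely.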

Through validation in three representative biological scenarios spanning immunology and neuroscience, CELLECT demonstrates strong performance and broad applicability under complex conditions. Whether in germinal centers with densely packed, frequently dividing cells, in multichannel spleen datasets with interacting immune cell types, or in long-term imaging of Drosophila brains with tissue collapse and fluctuating signals, CELLECT consistently maintains trajectory integrity and signal stability without additional annotation or parameter tuning. These results address long-standing bottlenecks in 3D annotation and computational efficiency and establish center-based embedding driven by contrastive learning as a general and effective framework for large-scale automated biological image analysis.

The research team stated that future work will continue to optimize and refine the performance of CELLECT, improving both usability and functional scalability. The method will gradually evolve into an open and general platform for large-scale mesoscale data analysis. This direction is expected to promote a transition in cell dynamics research from single experiments to large-scale, multidimensional, and cross-scenario data-driven studies. It will also help establish a unified analytical paradigm across diverse research fields and provide new approaches for uncovering the dynamic principles of living systems and the mechanisms of complex diseases.

Hongyu Zhou, a postdoctoral researcher, and Seonghoon Kim, a research scientist from the Department of Automation, Tsinghua University, are co-first authors of the paper. Prof. Qionghai Dai and Prof. Jiamin Wu, from the Department of Automation, the Institute of Brain and Cognitive Sciences, and the Tsinghua-IDG/McGovern Institute for Brain Research, are co-corresponding authors. This work was supported by the National Natural Science Foundation of China (NSFC).

Original article: https://www.nature.com/articles/s41592-025-02886-x

References:

Hirsch, P. et al. Tracking by weakly-supervised learning and graph optimization for whole-embryo C. elegans lineages. Medical Image Computing and Computer Assisted Intervention (MICCAI) 25–35 (2022).

Malin-Mayor, C. et al. Automated reconstruction of whole-embryo cell lineages by learning from sparse annotations. Nature Biotechnology 41, 44–49 (2023).

Maška, M. et al. The Cell Tracking Challenge: 10 years of objective benchmarking. Nature Methods 20, 1010–1020 (2023).

Zhao, Z. et al. Two-photon synthetic aperture microscopy for minimally invasive fast 3D imaging of native subcellular behaviors in deep tissue. Cell (2023).

An, H. et al. Splenic red pulp macrophages eliminate the liver-resistant Streptococcus pneumoniae from the blood circulation of mice. Science Advances 11, eadq6399 (2025).

Fan, J. et al. Prominent involvement of acetylcholine dynamics in stable olfactory representation across the Drosophila brain. Nature Communications 16, 8638 (2025).