Perfect optical imaging, one of humanity's ultimate goals in perceiving the world, is fundamentally limited by optical aberrations arising from lens fabrication errors and complex environmental disturbances. The photonics research team proposed an integrated meta-imaging sensor architecture that opens a new path to solving the aberration problem. Instead of building perfect lenses, the team developed a super sensor that records the imaging process rather than only the final image. Through ultra-fine sensing and fusion of incoherent, complex light fields, perfect three-dimensional optical imaging can still be achieved, even with imperfect lenses and in complex imaging environments.

The team mastered two core technologies, ultra-fine light-field sensing and ultra-fine light-field fusion: distributed sensing breaks through the space-bandwidth product bottleneck, and self-organized fusion achieves multi-dimensional, multi-scale high-resolution reconstruction. The technology replaces the physical, analog modulation of light in traditional optical systems with digital modulation, pushing precision to the optical diffraction limit. Because it requires no changes to existing optical imaging systems, it addresses the long-standing aberration bottleneck and is poised to become the next-generation universal imaging sensor architecture, bringing disruptive changes to fields such as astronomical observation, biological imaging, medical diagnostics, mobile devices, industrial inspection, and security monitoring.
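The idea of recording the imaging process and correcting it afterwards can be illustrated with a deliberately simplified model. As a hypothetical stand-in for the captured light field, assume a set of sub-aperture views in which aberrations appear only as integer image shifts (local wavefront tilts). Estimating each shift digitally and undoing it before fusion, a plain shift-and-add, recovers a sharp image that naive averaging blurs. This is a toy sketch of the principle, not the team's digital adaptive optics algorithm, and the names `estimate_shift` and `fuse_views` are illustrative.

```python
import numpy as np

def estimate_shift(ref, img):
    """Return the integer (dy, dx) that, passed to np.roll, aligns img with ref."""
    a = ref - ref.mean()
    b = img - img.mean()
    # Circular cross-correlation via the FFT; its peak marks the best alignment.
    xcorr = np.fft.ifft2(np.fft.fft2(a) * np.conj(np.fft.fft2(b))).real
    dy, dx = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    h, w = ref.shape
    # Convert wrap-around peak indices into signed shifts.
    dy = dy - h if dy > h // 2 else dy
    dx = dx - w if dx > w // 2 else dx
    return int(dy), int(dx)

def fuse_views(views, correct=True):
    """Average sub-aperture views, optionally realigning each one to the first view."""
    ref = views[0]
    shifts, aligned = [], []
    for v in views:
        s = estimate_shift(ref, v) if correct else (0, 0)
        shifts.append(s)
        aligned.append(np.roll(v, s, axis=(0, 1)))
    return np.mean(aligned, axis=0), shifts

rng = np.random.default_rng(0)
scene = rng.random((64, 64))
# Hypothetical per-view displacements standing in for aberration-induced wavefront tilts.
true_shifts = [(0, 0), (2, -1), (-3, 1), (1, 3), (-2, -2)]
views = [np.roll(scene, s, axis=(0, 1)) + 0.05 * rng.standard_normal(scene.shape)
         for s in true_shifts]

naive, _ = fuse_views(views, correct=False)
corrected, est = fuse_views(views, correct=True)
print("mean error, naive:    ", np.abs(naive - scene).mean())
print("mean error, corrected:", np.abs(corrected - scene).mean())
```

The first view serves as the reference here; in a real system the reference choice and the shift model would both be far richer than integer translations.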

Figure | Left: wavefront sensor; right: deformable mirror

Traditional optical systems are designed mainly for the human eye and follow a "what you see is what you get" philosophy, striving for perfect imaging on the optical side. However, manufacturing limitations and complex environmental disturbances still make perfect imaging systems difficult to build. Adaptive optics emerged to address this challenge: a wavefront sensor measures environmental aberrations in real time and feeds them back to a deformable mirror, which dynamically corrects them to maintain perfect imaging. Because aberrations are highly non-uniform across space, however, this approach achieves high-resolution imaging only over a very small field of view and cannot correct aberrations across a large field or at multiple regions simultaneously. Traditional adaptive optics systems are also highly complex and costly, which severely limits their applications.
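The feedback loop, and why it only helps near one point in the field, can be sketched in a few lines. This assumes a hypothetical aberration described by three mode coefficients that drift linearly with field angle, a single guide star at field angle 0, and a simple integrator controller in which the deformable mirror directly subtracts those coefficients. All numbers are invented for illustration.

```python
import numpy as np

def run_ao_loop(guide_aberration, gain=0.5, iterations=30):
    """Closed-loop integrator: the sensor reports the residual, the mirror absorbs part of it."""
    mirror = np.zeros_like(guide_aberration)
    for _ in range(iterations):
        residual = guide_aberration - mirror  # wavefront sensor measurement at the guide star
        mirror = mirror + gain * residual     # command sent to the deformable mirror
    return mirror

# Hypothetical aberration model: mode coefficients that change linearly across the field.
base = np.array([0.8, -0.3, 0.5])            # aberration at the guide star (0 arcsec)
gradient = np.array([0.01, 0.02, -0.015])    # change per arcsecond of field angle

def aberration_at(field_arcsec):
    return base + gradient * field_arcsec

mirror = run_ao_loop(aberration_at(0.0))
field_points = [0, 10, 20, 40, 80]
residual_rms = [float(np.sqrt(np.mean((aberration_at(x) - mirror) ** 2)))
                for x in field_points]
for x, r in zip(field_points, residual_rms):
    print(f"{x:>3} arcsec from guide star: residual RMS {r:.4f}")
```

The loop drives the residual at the guide star toward zero, but the single mirror shape cannot match the different aberrations elsewhere, so the residual grows with distance from the guide star.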

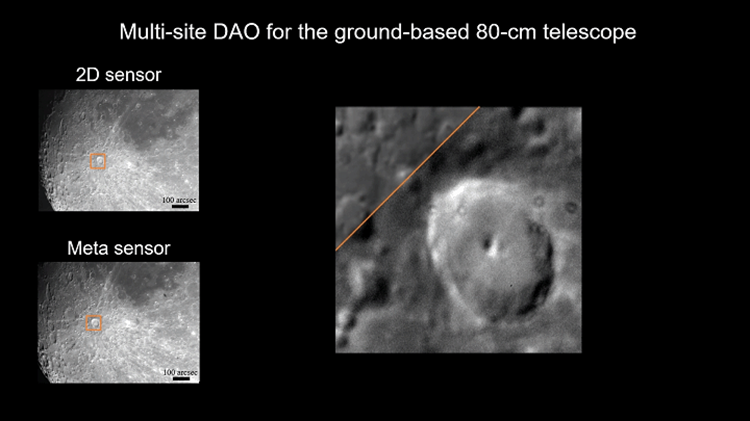

The meta-imaging sensor offers a scalable, distributed solution to optical aberrations at the sensor level, enabling high-performance imaging with a very simple optical system. With a conventional single-lens system, the team's digital adaptive optics (DAO) achieved gigapixel high-resolution imaging, reducing the cost and size of an optical system at a given resolution by more than three orders of magnitude. The team further tested the sensor on the Tsinghua-NAOC 80-cm telescope at the Xinglong Observatory of the National Astronomical Observatories of China, where it significantly improved the resolution and signal-to-noise ratio of astronomical images and expanded the adaptive-optics correction field of view from the conventional 40 arcseconds to 1,000 arcseconds. The meta-imaging sensor can also capture depth information, with higher lateral and axial positioning accuracy than traditional light-field imaging, offering a low-cost solution for autonomous driving and industrial inspection. Going forward, the team plans to further explore the meta-imaging architecture, exploit its advantages in different fields, and establish a new generation of universal imaging sensor architecture, bringing disruptive improvements in 3D perception performance.
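Depth from a light field is commonly read from its epipolar-plane image (EPI): a scene point traces a line across the angular views whose slope equals its disparity, and disparity maps to depth through the camera's calibration. The sketch below estimates an integer disparity by shearing a synthetic EPI until its rows line up. It uses a textbook shear-and-compare method with hypothetical names (`make_epi`, `estimate_disparity`) and data, to illustrate the principle rather than the team's depth-reconstruction method.

```python
import numpy as np

def make_epi(texture, disparity, n_views=7):
    """Toy 1D light field: view u sees the texture shifted by u * disparity pixels."""
    us = np.arange(n_views) - n_views // 2  # angular view indices, e.g. -3..3
    epi = np.stack([np.roll(texture, int(u) * disparity) for u in us])
    return epi, us

def estimate_disparity(epi, us, candidates):
    """Return the candidate disparity whose shear makes every EPI column most uniform."""
    costs = []
    for c in candidates:
        # Undo candidate disparity c in each view; the correct c lines the rows up.
        sheared = np.stack([np.roll(row, -int(u) * c) for row, u in zip(epi, us)])
        costs.append(sheared.var(axis=0).sum())  # spread across views, summed over x
    return candidates[int(np.argmin(costs))]

rng = np.random.default_rng(1)
texture = rng.random(64)  # hypothetical scene texture along one image row
candidates = list(range(-4, 5))
for true_d in (-2, 0, 1, 3):
    epi, us = make_epi(texture, true_d)
    epi = epi + 0.02 * rng.standard_normal(epi.shape)  # mild sensor noise
    print("true:", true_d, "estimated:", estimate_disparity(epi, us, candidates))
```

Finer angular sampling gives more rows to compare, which is one intuitive reason denser light-field capture can sharpen depth estimates.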

These results were published in Nature on October 19, 2022, under the title "An integrated imaging sensor for aberration-corrected 3D photography", and were selected as one of the Top Ten Optical Advances in China in 2022.

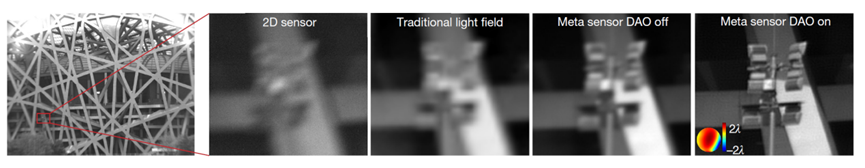

Figure | Comparison of results, from left to right: 2D sensor, traditional light-field imaging, meta-imaging sensor without DAO, and meta-imaging sensor with DAO

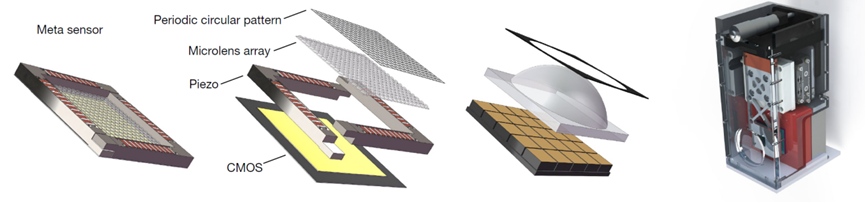

Figure | Concept and architecture of the meta-imaging sensor

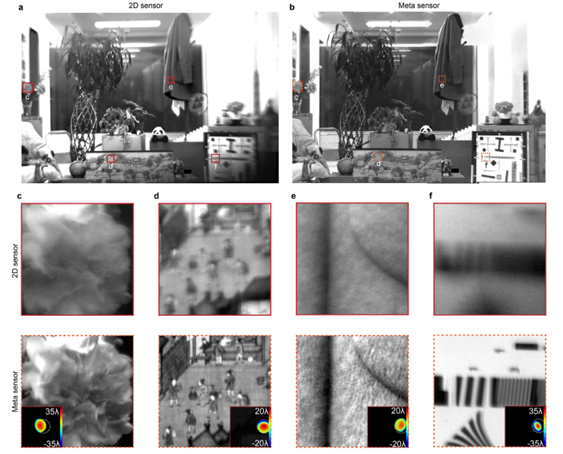

Figure | Gigapixel imaging with a single lens. a, Imaging result from a conventional 2D image sensor. b, Imaging result from the meta-imaging sensor. c-f, Comparisons of zoomed-in regions from a and b.

Figure | Multi-site DAO for the ground-based 80-cm telescope.

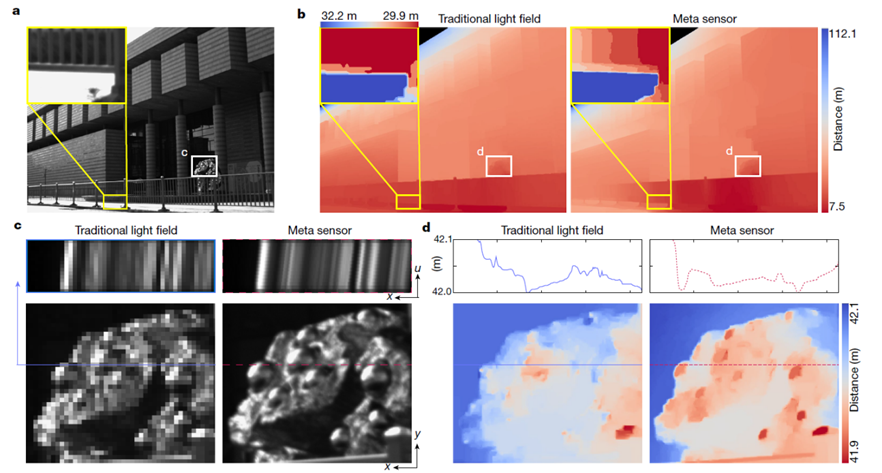

Figure | a, Comparison of 3D reconstruction results at the Tsinghua University Art Museum. b, Comparison between traditional light-field imaging and meta-imaging results; with the same algorithm, meta-imaging achieves higher positioning accuracy. c, Zoomed-in comparison of traditional light-field imaging and meta-imaging results in the spatial domain and the epipolar-plane image (EPI) domain. d, Zoomed-in comparison of 3D reconstructions from traditional light-field imaging and the meta-imaging sensor.