01 Introduction

Recently, the Imaging and Intelligent Technology Laboratory in the Department of Automation and the Intelligent Sensing Integrated Circuit and System Laboratory in the Department of Electronic Engineering at Tsinghua University jointly proposed an all-analog photo-electronic integrated computing chip named ACCEL (all-analog chip combining electronic and light computing). The team reports that this chip is the first in the world to show, at the system level, photo-electronic computing that outperforms high-performance GPUs by more than three thousand times in computing speed and by more than four million times in energy efficiency. The result demonstrates the advantages of photonic computing across a range of AI tasks. The work was published in Nature under the title "All-analog photoelectronic chip for high-speed vision tasks."

02 Research Background

Over the past decade, Moore's Law, long the main driver of improvements in computing performance, has slowed and may be approaching its end, as electronic transistors near their physical size limits. Further gains in computing speed and energy efficiency are therefore urgently needed, and new computing architectures are seen as the key to achieving them. Photo-electronic computing, with its extremely high parallelism and speed, is considered a strong candidate for a future disruptive computing architecture, and leading research teams worldwide have proposed a variety of photo-electronic computing architectures. However, several key bottlenecks still stand in the way of directly replacing existing electronic devices with photo-electronic computing chips in system-level applications:

1. Integrating large numbers of optical computing units (controllable neurons) on a single chip while keeping error accumulation under control.

2. Achieving high-speed, energy-efficient on-chip nonlinearity.

3. Providing efficient interfaces between optical computing and electronic signal processing, so that optical chips remain compatible with today's electronically driven information infrastructure. At present, a single analog-to-digital conversion consumes several orders of magnitude more energy than one multiply-accumulate step in optical computing. This overhead masks the performance advantages of optical computing and makes it difficult for optical computing chips to compete in practical applications.
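To make the third bottleneck concrete, the following Python sketch tallies a toy energy budget in which each inference performs many optical operations but must also pass some results through ADCs. The class name `EnergyBudget` and every numeric value are hypothetical illustrations, not figures from the paper; the point is only that when one conversion costs orders of magnitude more than one optical operation, conversions can dominate the total energy even when they are far fewer.

```python
"""Toy energy budget for a hybrid optical/electronic pipeline.

All energy figures are hypothetical placeholders, not measured values
from the ACCEL paper.
"""
from dataclasses import dataclass


@dataclass
class EnergyBudget:
    n_ops: int            # optical multiply-accumulate operations per inference
    e_op_joule: float     # energy per optical operation (hypothetical)
    n_conversions: int    # analog-to-digital conversions per inference
    e_adc_joule: float    # energy per ADC conversion (hypothetical)

    def optical_energy(self) -> float:
        return self.n_ops * self.e_op_joule

    def adc_energy(self) -> float:
        return self.n_conversions * self.e_adc_joule

    def total_energy(self) -> float:
        return self.optical_energy() + self.adc_energy()

    def adc_fraction(self) -> float:
        """Share of the total energy spent on analog-to-digital conversion."""
        return self.adc_energy() / self.total_energy()

    def effective_ops_per_joule(self) -> float:
        """System-level efficiency once the conversion cost is included."""
        return self.n_ops / self.total_energy()


if __name__ == "__main__":
    # Hypothetical numbers chosen only to illustrate the scaling argument:
    # one million optical operations feeding ten thousand ADC conversions,
    # with each conversion costing 1000x the energy of one optical operation.
    budget = EnergyBudget(n_ops=1_000_000, e_op_joule=1e-15,
                          n_conversions=10_000, e_adc_joule=1e-12)
    print(f"ADC share of energy:     {budget.adc_fraction():.1%}")
    print(f"Optical-only efficiency: {1 / budget.e_op_joule:.2e} ops/J")
    print(f"System efficiency:       {budget.effective_ops_per_joule():.2e} ops/J")
```

With these illustrative values, the conversions account for most of the energy, so the system-level efficiency falls well below what the optical operations alone would allow.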

03 Research Innovations

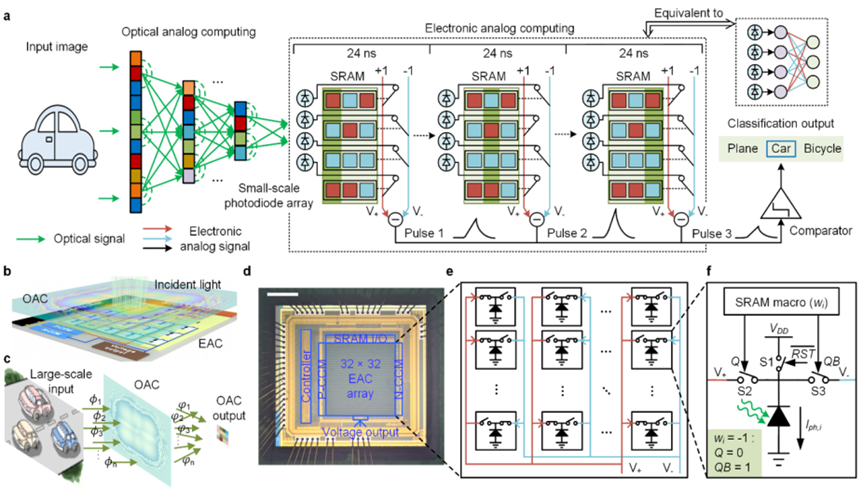

To address these challenges, the team proposed a computing framework that combines analog electronics with analog optics. On a single chip, it integrates a large-scale diffractive neural network, which extracts visual features optically, with purely analog electronic computing based on Kirchhoff's laws: the photocurrents produced by the photodiodes are combined directly in the analog domain rather than being digitized first. This design sidesteps the physical limits imposed by ADC speed, precision, and power consumption, and overcomes the three bottlenecks listed above: large-scale integration of computing units, efficient nonlinearity, and high-speed optical-electronic interfaces on a single chip. On tasks such as three-class ImageNet classification, the ACCEL chip system achieved a system-level computing speed of 4.6 Peta-OPS, more than 400 times that of existing high-performance optical computing chips and more than 4,000 times that of analog electronic computing chips. Its system-level energy efficiency reached 74.8 Peta-OPS/W, an improvement of two thousand to several million times over existing high-performance optical computing, analog electronic computing, GPU, and TPU architectures.

Figure|The architecture and working principles of ACCEL
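The data flow described above can be illustrated with a deliberately simplified Python model: an input field passes through a linear diffractive transform, photodiodes perform square-law (intensity) detection, and each class score is the difference between the currents summed on a positive and a negative line, as Kirchhoff's current law allows. The function names, the single random-phase transform, and the binary ±1 weights are assumptions made for illustration; this is a sketch, not the ACCEL circuit or its trained parameters.

```python
"""Toy model: diffractive optics -> photodiodes -> Kirchhoff current summation.

Hypothetical simplifications (not taken from the ACCEL paper):
- the diffractive layers are collapsed into one fixed complex linear transform;
- photocurrent equals detected intensity (unit responsivity, no noise);
- for each output class, every photodiode is switched onto either a positive
  or a negative summing line, i.e. a binary +1/-1 weight.
"""
import cmath
import random
from typing import List, Sequence


def diffract(field: Sequence[complex], transfer: Sequence[Sequence[complex]]) -> List[complex]:
    """Propagate an input optical field through a linear diffractive transform."""
    return [sum(t * e for t, e in zip(row, field)) for row in transfer]


def detect(field: Sequence[complex]) -> List[float]:
    """Square-law photodetection: each photocurrent is proportional to |E|^2.

    This squaring supplies a nonlinearity at the optical/electronic boundary.
    """
    return [abs(e) ** 2 for e in field]


def kirchhoff_sum(currents: Sequence[float], signs: Sequence[Sequence[int]]) -> List[float]:
    """Return one differential score (I_plus - I_minus) per output class.

    By Kirchhoff's current law, the current on a shared line is the sum of the
    currents fed into it, so the weighted sum needs no per-photodiode ADC.
    """
    scores = []
    for class_signs in signs:
        i_plus = sum(i for i, s in zip(currents, class_signs) if s > 0)
        i_minus = sum(i for i, s in zip(currents, class_signs) if s < 0)
        scores.append(i_plus - i_minus)
    return scores


def classify(field: Sequence[complex], transfer: Sequence[Sequence[complex]],
             signs: Sequence[Sequence[int]]) -> int:
    """Pick the class with the largest differential current."""
    scores = kirchhoff_sum(detect(diffract(field, transfer)), signs)
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    rng = random.Random(0)
    n_pixels, n_detectors, n_classes = 16, 8, 3
    # Hypothetical diffractive transform: random phases, scaled by 1/sqrt(n_pixels).
    transfer = [[cmath.exp(2j * cmath.pi * rng.random()) / n_pixels ** 0.5
                 for _ in range(n_pixels)] for _ in range(n_detectors)]
    # Hypothetical binary weights: one sign per (class, photodiode) pair.
    signs = [[rng.choice((1, -1)) for _ in range(n_detectors)] for _ in range(n_classes)]
    image = [rng.random() for _ in range(n_pixels)]  # pixel values used as field amplitudes
    print("predicted class:", classify(image, transfer, signs))
```

Because the summation happens on shared wires, the only values this toy model would ever need to digitize are the final class scores, not every photodiode reading.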

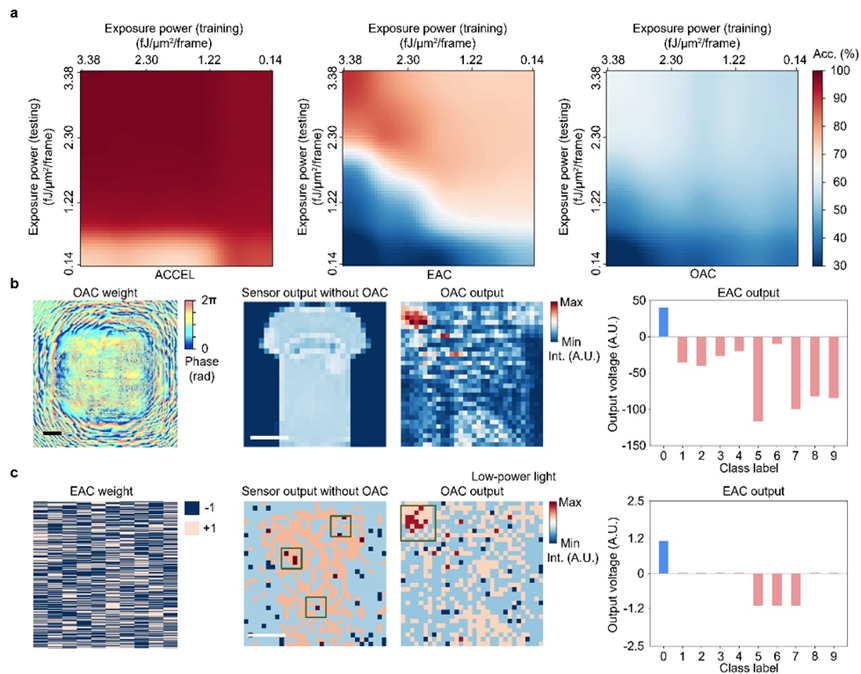

Ultra-high-speed visual computing tasks, such as real-world autonomous driving, raise a further problem: ultra-high computing speed implies extremely short exposure times per frame and therefore very little light energy per exposure. The research team addressed this with a second system-level solution, a joint design that exploits the complementary physical strengths of the optical and electronic modules. While extracting features in parallel, the optical module learns, in an unsupervised manner, to concentrate light onto particular feature points. This raises the local light intensity even when the total light intensity is very low, which improves the signal-to-noise ratio of the corresponding photodiodes.

At the same time, the team used the reconfigurability of the electronic module to develop adaptive training algorithms that effectively correct fabrication and alignment errors in the preceding layers of optical modules. They also modeled low-light noise conditions during training. Results in the paper show that, compared with optical computing or analog electronic computing modules alone, the ACCEL chip significantly improved accuracy across a range of light intensities, especially in low light.

The paper also demonstrated experiments that used incoherent light to compute directly on traffic scenes and determine the direction of vehicle movement. Because incoherent light lacks fixed phase relationships, computing with it is more susceptible to noise, which has so far prevented high-performance optical computing from being applied directly to practical scenarios such as autonomous driving and natural scene perception. ACCEL instead relies on its robustness to noise to compute with incoherent light, performing calculations under illumination from sources such as a mobile phone flashlight; video demonstrations are provided.
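The low-light argument can be checked with a standard shot-noise model, in which a detector receiving n photons has noise variance n, so its SNR grows as the square root of n and is reduced further by any read noise. The sketch below compares spreading a fixed photon budget over many photodiodes with concentrating most of it onto a few, and includes a Gaussian-approximation noise sampler of the kind one might inject during noise-aware training. The photon counts, read-noise level, and function names are hypothetical and are not parameters from the paper.

```python
"""Why concentrating light helps in low light: a shot-noise sketch.

Assumptions (hypothetical, for illustration only): detectors are shot-noise
limited plus a fixed Gaussian read noise, and photon counts are per exposure.
None of the numbers come from the ACCEL paper.
"""
import math
import random


def snr(photons: float, read_noise: float = 0.0) -> float:
    """Signal-to-noise ratio of one photodiode.

    Shot noise has variance equal to the mean photon count; read noise adds
    an independent variance of read_noise**2.
    """
    if photons <= 0:
        return 0.0
    return photons / math.sqrt(photons + read_noise ** 2)


def per_detector_photons(total: float, n_detectors: int, fraction: float = 1.0) -> float:
    """Photons per detector when `fraction` of `total` is spread evenly over `n_detectors`."""
    return fraction * total / n_detectors


def noisy_intensity(photons: float, read_noise: float, rng: random.Random) -> float:
    """One simulated low-light reading, using a Gaussian approximation of shot
    noise plus read noise (e.g. for augmenting training data)."""
    return photons + rng.gauss(0.0, math.sqrt(photons + read_noise ** 2))


if __name__ == "__main__":
    total_photons = 1_000   # hypothetical photon budget per frame
    read_noise = 5.0        # hypothetical rms read noise, in photons
    spread = per_detector_photons(total_photons, n_detectors=400)
    focused = per_detector_photons(total_photons, n_detectors=10, fraction=0.8)
    print(f"spread evenly: {spread:6.1f} photons, SNR {snr(spread, read_noise):.2f}")
    print(f"concentrated:  {focused:6.1f} photons, SNR {snr(focused, read_noise):.2f}")
    rng = random.Random(42)
    print("noisy samples:", [round(noisy_intensity(focused, read_noise, rng), 1) for _ in range(3)])
```

Even after losing some light (here a hypothetical 20%), concentrating the remainder onto a few detectors raises each detector's SNR far above what an even spread achieves.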

04 Summary and Outlook

This work not only proposes a disruptive chip architecture with remarkable performance but also offers engineering solutions to a series of bottlenecks that have hindered the practical deployment of optical computing. It also suggests that optical computing need not pursue a purely "all-optical" architecture; instead, optical computing can be deeply integrated with today's digital world so that the two complement each other. This approach makes it feasible for optical computing chips to move from theoretically high computing speed and energy efficiency to practical system-level applications in complex visual scenarios.

Academician Dai Qionghai, Associate Professor Fang Lu, Associate Researcher Qiao Fei, and Assistant Professor Wu Jiamin from Tsinghua University are the corresponding authors of this paper; Ph.D. students Chen Yitong and Maimaiti Nazamaiti and Dr. Xu Han are the co-first authors; Dr. Meng Yao, Assistant Researcher Zhou Tiankuang, Ph.D. student Li Guangpu, Researcher Fan Jingtao, and Associate Researcher Wei Qi also participated in this research. The project was supported by the Ministry of Science and Technology's 2030 "New Generation Artificial Intelligence" major project, the National Natural Science Foundation of China's Outstanding Youth Science Fund, and the Basic Science Center project.

Figure|ACCEL performance under different exposure intensity and tasks